Abstract

Music offers unique opportunities for creating a positive impact on the brain: by itself, it can make us smile, cry, march to a beat or lull us to sleep. Listening to music recruits activity in many parts of the brain, from the brainstem (e.g., Wong et al., 2007), to primary and surrounding auditory cortices (e.g., Bermudez et al., 2009; Schneider et al., 2002), to higher-order areas of the prefrontal cortex (e.g., Lehne et al., 2014).

The central idea behind ‘Musical Phase Locking’ is to optimize the timing of neural processing, in essence reorganizing the brain’s internal communication.

Specifically, this framework rests on three main premises:

- The brain is organized into networks that communicate through electrical activity to engender cognition.

- Audio signals can synchronize this neuronal activity (Schön & Tillmann, 2015).

- Synchronized brain networks communicate differently and potentially more efficiently, which may have implications for subsequent behaviors.

Background

The brain is organized into widely distributed networks of regions that tend to respond together (Lee et al., 2013), such as the dorsal attention network and the salience network. These networks communicate through rhythmic electrical activity called neuronal oscillations (Siegel, Donner & Engel, 2012). This activity regulates the timing of action potentials, the electrical signals through which neurons communicate. Neuronal oscillations can be seen as a ‘middle man’ linking the activity of individual neurons to behavior and cognition (Schön & Tillmann, 2015).

Auditory signals have been shown to cause neuronal oscillations to synchronize to the temporal regularities inherent in the stimulus (Luo & Poeppel, 2007; Doelling & Poeppel, 2015). Rhythms and beats in music can produce a corresponding ‘electrical beat’ in the brain, effectively creating artificial neuronal oscillations. The brain networks processing these oscillations tend toward more efficient, lower-energy states by synchronizing, or ‘entraining’, to the beat (Woods, 2019). The result is called neural phase locking: networks become more organized, or ‘beat to the same drum’ (der Nederlanden et al., 2020; Tal et al., 2017).

We at Evoked Response call this Musical Phase Locking.
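Phase locking of this kind is commonly quantified with the phase-locking value (PLV): the length of the average unit vector of the phase difference between two signals. It is 1 when the lag between them is constant and near 0 when their phases are unrelated. The sketch below is a minimal illustration, not Evoked Response's analysis pipeline. It assumes instantaneous phases have already been extracted (for example, with a Hilbert transform of band-pass-filtered EEG), and the helper names, the 120 BPM tempo and the jitter values are hypothetical.

```python
import cmath
import math
import random


def phase_locking_value(phases_a, phases_b):
    """Return PLV = |mean(exp(i * (phi_a - phi_b)))|, a value in [0, 1]."""
    if len(phases_a) != len(phases_b) or not phases_a:
        raise ValueError("phase series must be non-empty and of equal length")
    total = sum(cmath.exp(1j * (a - b)) for a, b in zip(phases_a, phases_b))
    return abs(total) / len(phases_a)


def beat_phases(bpm, duration_s, sample_rate_hz):
    """Instantaneous phase (radians) of a pulse at `bpm`, sampled over time."""
    beat_hz = bpm / 60.0  # e.g. 120 BPM -> 2 Hz
    n = int(duration_s * sample_rate_hz)
    return [2 * math.pi * beat_hz * k / sample_rate_hz for k in range(n)]


rng = random.Random(0)
stimulus = beat_phases(bpm=120, duration_s=10, sample_rate_hz=250)

# Hypothetical "entrained" oscillation: constant lag plus small phase jitter.
entrained = [p + 0.8 + rng.gauss(0, 0.3) for p in stimulus]
# Hypothetical "unentrained" oscillation: phases unrelated to the beat.
unentrained = [rng.uniform(0, 2 * math.pi) for _ in stimulus]

print(round(phase_locking_value(stimulus, entrained), 2))
print(round(phase_locking_value(stimulus, unentrained), 2))
```

With Gaussian phase jitter of standard deviation σ = 0.3 rad, the expected PLV is roughly exp(-σ²/2) ≈ 0.96, so the first value should land near that, while the unrelated phases give a value close to 0. The constant 0.8 rad lag does not lower the PLV, which measures consistency of the lag rather than its size.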

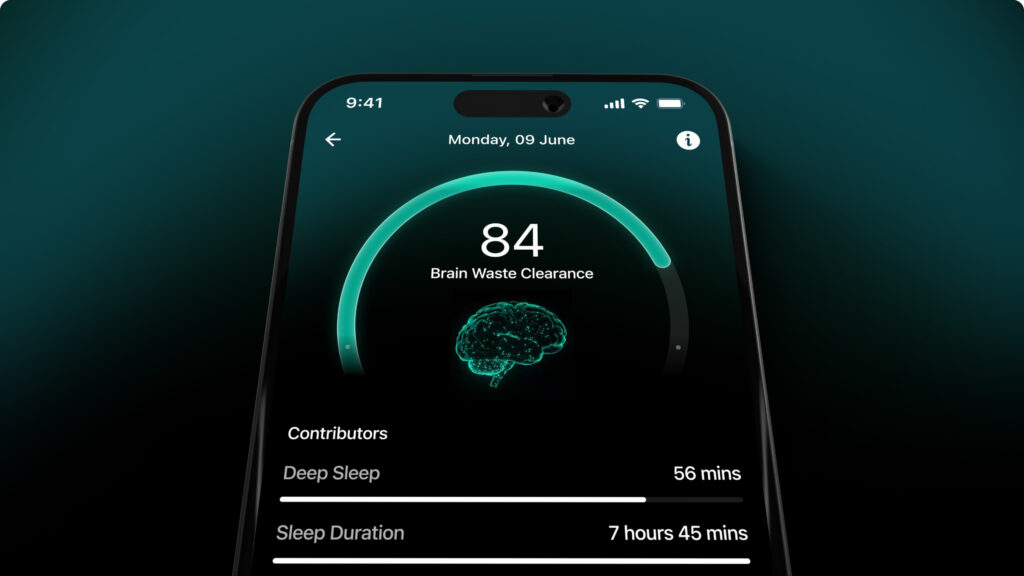

Musical Phase Locking can serve two goals. First, mounting evidence shows that specific patterns of neuronal oscillations can be seen as fingerprints of cognitive processes (Buzsaki, 2004) such as attention, sleep and memory consolidation (Papalambros et al., 2017; Ngo et al., 2013). Mental states can thus be altered by aligning neuronal oscillations to a different rhythmic stream.
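A tempo can be related to these oscillation patterns by converting it to a frequency: a pulse of n notes per beat at a given BPM repeats at BPM / 60 × n Hz. The sketch below does this and looks up the conventional EEG band for the result. The band edges are approximate and vary between studies, and the helper names and example tempos are hypothetical; it is meant for intuition, not as a statement of which bands any particular track targets.

```python
# Approximate, commonly used EEG band edges in Hz (conventions differ by study).
EEG_BANDS = [
    ("delta", 0.5, 4.0),
    ("theta", 4.0, 8.0),
    ("alpha", 8.0, 13.0),
    ("beta", 13.0, 30.0),
    ("gamma", 30.0, float("inf")),
]


def rhythm_hz(bpm, notes_per_beat=1):
    """Repetition rate of a rhythmic pulse in Hz: quarter notes at 120 BPM -> 2.0 Hz."""
    return bpm / 60.0 * notes_per_beat


def eeg_band(freq_hz):
    """Name of the approximate EEG band containing freq_hz, or None below 0.5 Hz."""
    for name, low, high in EEG_BANDS:
        if low <= freq_hz < high:
            return name
    return None


# Hypothetical examples: the beat itself, then sixteenth notes (4 per quarter-note beat).
for bpm, per_beat in [(60, 1), (90, 4), (150, 4)]:
    hz = rhythm_hz(bpm, per_beat)
    print(f"{bpm} BPM, {per_beat} note(s)/beat: {hz:.1f} Hz -> {eeg_band(hz)}")
```

Under these assumptions, the beat itself at common tempos (about 60 to 180 BPM, or 1 to 3 Hz) falls in the delta range, while faster subdivisions such as sixteenth notes reach theta or alpha rates.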

Second, Musical Phase Locking can make sensory and cognitive processing more efficient by creating predictable cycles of high and low excitability, so that neuron-to-neuron communication happens in waves: a high-excitability phase followed by a deeper, low-excitability phase of rest. In this way, neural communication takes less energy (Engel & Fries, 2010). To put it another way, it becomes easier to think harder.

The hypothesis that musical structure can cause phase locking in the brain has wide empirical support (Jones et al., 2002; der Nederlanden et al., 2020).

Audio has been used to optimize attention in tasks paired with visual stimuli (Jones, 1976), and to increase memory consolidation during sleep when timed to existing neuronal oscillations (Papalambros et al., 2017; Ngo et al., 2013). More pertinent to this discussion, there is mounting evidence that music alone can entrain neuronal oscillations (Bolger et al., 2013) and affect a variety of brain networks (Sachs et al., 2020).

Methods

For music to reliably cause neural phase locking, it must be composed from the ground up to meet the following conditions:

- The music as a whole should be richly textured, providing a broad frequency spectrum as a carrier for the neural stimulation.

- Every instrument must be quantized to predetermined dominant rhythms that adhere to the meter and tempo (in beats per minute, BPM) of the music. Quantization aligns the timing, or phase, of each note onset to the chosen beat. The meter, BPM and dominant rhythms are determined in the testing phase.

- The amplitude envelope (‘duty cycle’) of every instrument, that is, its attack, decay, sustain and release (ADSR), must also be synchronized across instruments within a margin of error. For example, the attack of a violin is often very different from that of a guitar. Modern audio processing that compensates for these differences is essential if the brain is expected to entrain to the dominant rhythms of the track.
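The quantization and envelope-alignment conditions above can be sketched as follows. The example snaps loosely played onsets to a sixteenth-note grid, then starts each instrument's notes early by its attack time so that attack peaks coincide within a tolerance. Only the attack stage of the ADSR envelope is modeled, and the function names, attack times and 10 ms tolerance are hypothetical, not values from Evoked Response's production process.

```python
def quantize(onsets_s, bpm, subdivision=4):
    """Snap note onsets (in seconds) to the nearest grid line.

    Grid spacing is one beat (60 / bpm seconds) divided by `subdivision`;
    with a quarter-note beat, subdivision=4 gives a sixteenth-note grid.
    Python's round() resolves exact ties to the even grid index.
    """
    step = 60.0 / bpm / subdivision
    return [round(t / step) * step for t in onsets_s]


def compensate_attack(onsets_s, attack_s):
    """Start each note `attack_s` early so its attack peak lands on the grid."""
    return [t - attack_s for t in onsets_s]


def peaks_aligned(onsets_by_instrument, attack_by_instrument, tolerance_s=0.010):
    """True if simultaneous notes reach their attack peak within tolerance_s.

    Assumes every instrument plays the same number of simultaneous notes and
    that the amplitude peak occurs exactly `attack` seconds after note start.
    """
    peaks = [
        [t + attack_by_instrument[name] for t in onsets]
        for name, onsets in onsets_by_instrument.items()
    ]
    return all(max(group) - min(group) <= tolerance_s for group in zip(*peaks))


# Hypothetical attack times in seconds: a slow violin swell vs. a fast guitar pluck.
ATTACK_S = {"violin": 0.080, "guitar": 0.005}

played = [0.02, 0.49, 1.01, 1.48]  # slightly loose performance at 120 BPM
grid = quantize(played, bpm=120)   # sixteenth-note grid, step = 0.125 s

naive = {name: grid for name in ATTACK_S}
fixed = {name: compensate_attack(grid, ATTACK_S[name]) for name in ATTACK_S}

print(grid)                            # [0.0, 0.5, 1.0, 1.5]
print(peaks_aligned(naive, ATTACK_S))  # False: peaks are 75 ms apart
print(peaks_aligned(fixed, ATTACK_S))  # True
```

Compensating the first note produces a negative start time (here -0.08 s for the violin), so a real arrangement would need a short pre-roll. A fixed shift by the attack time is the simplest possible compensation; instruments whose perceived onset differs from their amplitude peak would need a more careful model.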

Testing & Iteration

A rigorous scientific testing process is the most important part of our evidence-based approach. The testing cycle never ends: we are constantly testing new tracks and new methodologies.

Our process can be outlined in the following steps:

1. First, we draw on the scientific literature and our experience to form hypotheses about which musical elements will work best for a particular purpose, or for a particular subset of individuals. For example, we might hypothesize that very rapid rhythms would help someone focus or work out, whereas very slow rhythms would aid relaxation or sleep.

Every hypothesis has two parts: (1) an expected neuroimaging result, and (2) an expected behavioral result. Relying on both physiological and behavioral data allows us to iterate rapidly and to incorporate recent innovations in the field.

2. The music itself is created by a team versed in neuroscience and psychophysics, and composed with a hypothesis in mind.

3. The first round of testing uses neuroimaging equipment such as EEG or fMRI while subjects are resting or performing a task. Recent advances in EEG equipment have made this easier than ever, allowing us to gather medical-grade data outside the lab, in real-life environments, faster and with more subjects.

We test our hypotheses by looking for telltale signs of organized neuronal communication that differ statistically between an experimental group or condition and a control group or condition. If no such difference is found, we revise the hypothesis and iterate on the composition.

4. If the neuroimaging results are positive, we then run large behavioral studies with hundreds of subjects. Typically, subjects perform a task while listening to the music; if we are examining effects on relaxation or sleep, we use standardized surveys or indexes instead.

5. Once the music debuts in the app, usage analytics are fed back into the testing and iteration process, and the steps begin again. Music is continually updated according to the latest results.

6. Finally, we share our data with the wider scientific community and plan to publish our results in the scientific literature.
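The statistical comparison in step 3, between an experimental and a control group or condition, can be illustrated with a two-sample permutation test on a per-subject phase-locking measure. The document does not say which test is used; the permutation test, the difference-of-means statistic, the function name and the randomly generated values below are illustrative assumptions, not study data.

```python
import random


def _mean(values):
    return sum(values) / len(values)


def permutation_test(group_a, group_b, n_permutations=10_000, seed=0):
    """Two-sided permutation test on the difference of group means.

    Returns (observed_difference, p_value). Condition labels are shuffled to
    approximate the null distribution of mean(a) - mean(b) under the
    hypothesis that the condition has no effect.
    """
    rng = random.Random(seed)
    observed = _mean(group_a) - _mean(group_b)
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)
    extreme = 0
    for _ in range(n_permutations):
        rng.shuffle(pooled)
        diff = _mean(pooled[:n_a]) - _mean(pooled[n_a:])
        if abs(diff) >= abs(observed):
            extreme += 1
    # Adding 1 to both counts includes the observed labeling and avoids p = 0.
    p_value = (extreme + 1) / (n_permutations + 1)
    return observed, p_value


# Synthetic per-subject PLVs, randomly generated for illustration (NOT study data).
rng = random.Random(42)
music = [min(1.0, max(0.0, rng.gauss(0.45, 0.08))) for _ in range(20)]
control = [min(1.0, max(0.0, rng.gauss(0.35, 0.08))) for _ in range(20)]

diff, p = permutation_test(music, control)
print(f"mean PLV difference = {diff:.3f}, p = {p:.4f}")
```

A permutation test makes no normality assumption, which suits bounded measures such as PLV, and fixing `seed` makes the p-value reproducible.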

References

- Wong, P. C. M., Skoe, E., Russo, N. M., Dees, T., Kraus, N. Musical experience shapes human brainstem encoding of linguistic pitch patterns. Nat. Neurosci., 10 (2007), pp. 420-422

- Bermudez, P., Lerch, J. P., Evans, A. C., Zatorre, R. J. Neuroanatomical correlates of musicianship as revealed by cortical thickness and voxel-based morphometry.